OpenAI Sora hit 1M downloads in 5 days, making 10-second videos. EA uses AI for Battlefield 6. UK CMA gave Google strategic market status. Zelda Williams wants no deepfakes of her father.

Ever feel like the world is spinning faster? Like something new pops up every other minute? Well, you're not wrong, especially when it comes to AI. Just this past week, a new AI tool for video creation landed, and it's already making waves. It's truly a wild ride. This isn't just about cool new tech; it's about big changes coming for jobs, for how we make stuff, and even for how governments keep things fair.

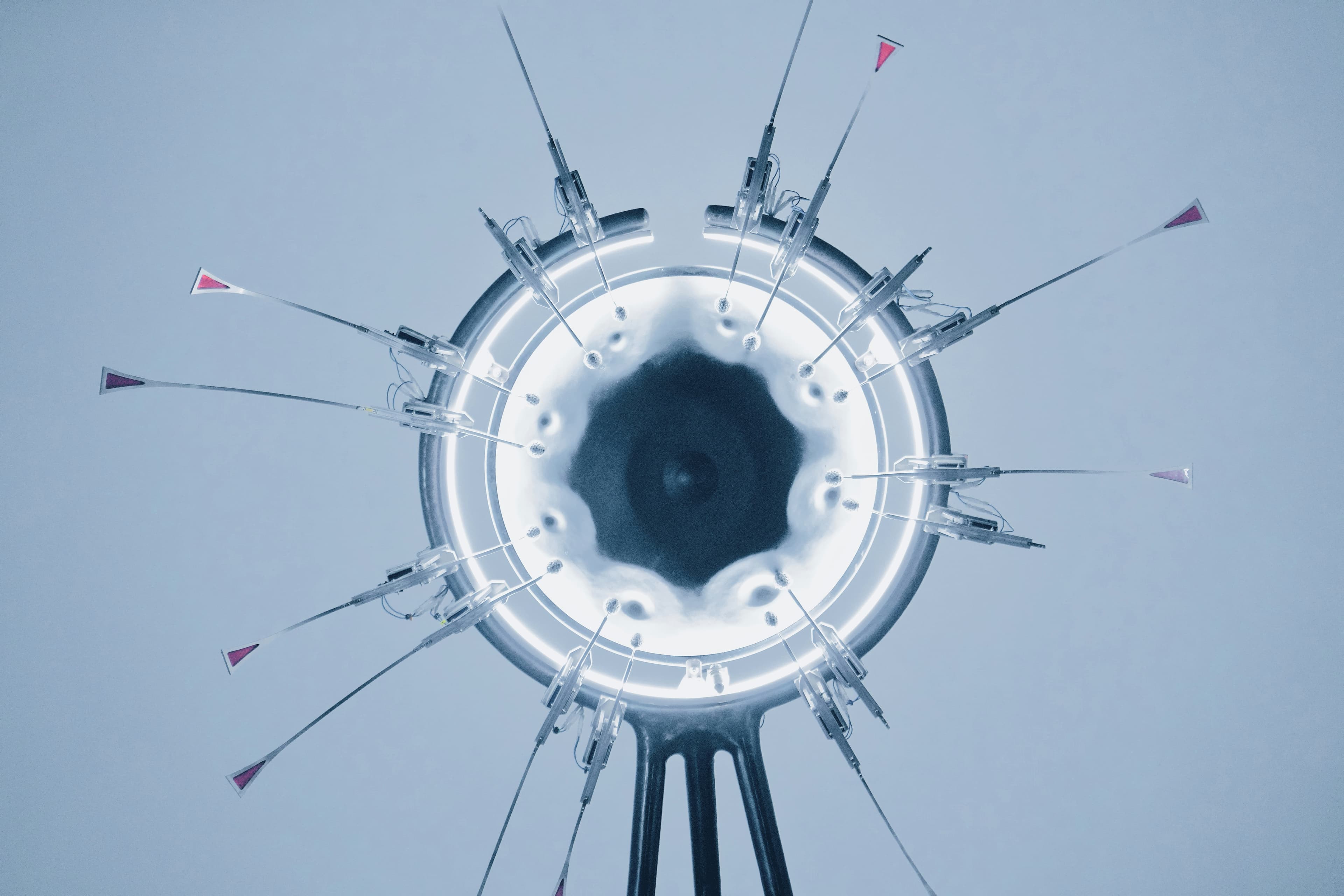

Picture this: you type a few words, and poof, you get a realistic 10-second video. OpenAI's Sora just did that, and it hit a million downloads in less than five days. That's faster than ChatGPT. This app, topping the Apple App Store charts, means anyone can be a video creator. Think of all the TikToks and Instagram reels. It’s like magic, right?

But here's the kicker. This quick creation has a flip side. We are seeing videos of deceased celebrities, like Michael Jackson and Tupac Shakur. Zelda Williams, Robin Williams' daughter, even asked people to stop using her father's likeness. OpenAI says people can request that a deceased public figure's likeness not be used, but how that works in practice is still hazy.

And then there are the copyright headaches. Sam Altman, OpenAI's boss, was shown in a deepfake with Pokémon, joking about Nintendo suing. He even grilled Pikachu in another video! Nintendo hasn't acted yet, but many companies are already suing AI firms over copyright. OpenAI says it's working on giving rights holders more control. It's a delicate dance, to say the least.

While Sora is out there making headlines, the gaming world is quietly using AI too. Take Battlefield 6, for example. This military shooter is a big deal for Electronic Arts (EA). Four studios worked on it. They didn't put AI-generated content directly into the game that players see.

But Rebecka Coutaz, a VP general manager, said they use generative AI in "preparatory stages." This lets their teams have more time and space to be creative. Fasahat 'Fas' Salim, a design director, isn't scared of AI. He thinks it’s just another tool. He asks, "how can we leverage that to take our games to the next level?" So, AI helps these massive games look incredible and run smoothly, even if you don't always spot it.

With all this AI growth, someone needs to keep an eye on things, right? The UK regulator, the Competition and Markets Authority (CMA), just gave Google "strategic market status." This is a big deal. It means the CMA can step in with "proportionate interventions" if needed.

Will Hayter, from the CMA, said Google's search dominance is "unquestionable." Google isn't thrilled. Oliver Bethell, Google's competition boss, worried it might "inhibit UK innovation and growth." He also said Google Search adds billions to the UK economy. But consumer groups, like Which?, called it "an important step." They want more choice for search engines. This move shows that governments are starting to think hard about how these huge tech companies, especially those building AI, affect everyone.

Beyond the videos and games, there's a bigger, almost philosophical, talk happening. When will AI match or even surpass human intelligence? Some tech billionaires, like Mark Zuckerberg, are reportedly "doom prepping." He's building a huge compound with an underground shelter in Hawaii. Even Ilya Sutskever, a co-founder of OpenAI, reportedly suggested building a bunker for top scientists before releasing artificial general intelligence (AGI).

Sam Altman himself thinks AGI will arrive "sooner than most people in the world think." Others, like Sir Demis Hassabis, put it within five to ten years.

But not everyone agrees. Dame Wendy Hall, a computer science professor, rolls her eyes at the idea. She says AI is amazing, "but it's nowhere near human intelligence." Neil Lawrence, a machine learning professor, calls AGI "absurd." He thinks it's a distraction from the real ways AI can help people now. Current AI tools are smart at patterns but don't "feel" or have "meta-cognition." This debate shows a deep divide in how we see our future with AI.

So, what does all this mean for us? For creators? For our wallets? It’s a bit like standing at a crossroads. Some fear a "creator crisis" where AI takes jobs. Others see AI as a powerful co-pilot, helping humans do more and better work. EA's use of AI in Battlefield 6 for behind-the-scenes work is a good example. AI headshot generators are another: they let people create professional-looking images without a photographer. It’s about leveraging these tools.

To navigate this, everyone needs to work together. Regulators, tech companies, and creators all have a part to play. We need clear rules. We need ways to know if something is AI-made. We need to protect creative rights. The promise of this technology is huge, but only if we use it wisely and ethically. What do you think? How will AI change your world?

Q: Will AI replace human artists and creators?
A: It's a big worry, but many experts think AI will change jobs more than replace them. Think of it as a powerful tool that helps creators do new things. It can speed up tasks, letting humans focus on bigger, more creative ideas.

Q: What does "strategic market status" for Google mean?
A: This label from the UK regulator means they can step in to make sure Google's dominance doesn't hurt competition. They might ask Google to offer more search engine choices or give publishers more control over their content. It's about keeping the market fair.

Q: How can I tell if a video or image is AI-generated?
A: It's getting harder to tell, but look for odd details or unnatural movements. Companies are working on "AI-traceability standards" and watermarks. As the tech grows, tools to spot AI content will likely improve, helping us sort fact from fiction.

This article is part of our Tech & Market Trends section. Check it out for more similar content!